Peer-Reviewed Publications

Ecological and Coevolutionary Dynamics in Modern Markets Yield Nonstationarity in Market Efficiencies

Colin M. Van Oort, John H. Ring IV, David Rushing Dewhurst, Christopher M. Danforth, Brian F. Tivnan

Complexity (June 2022)

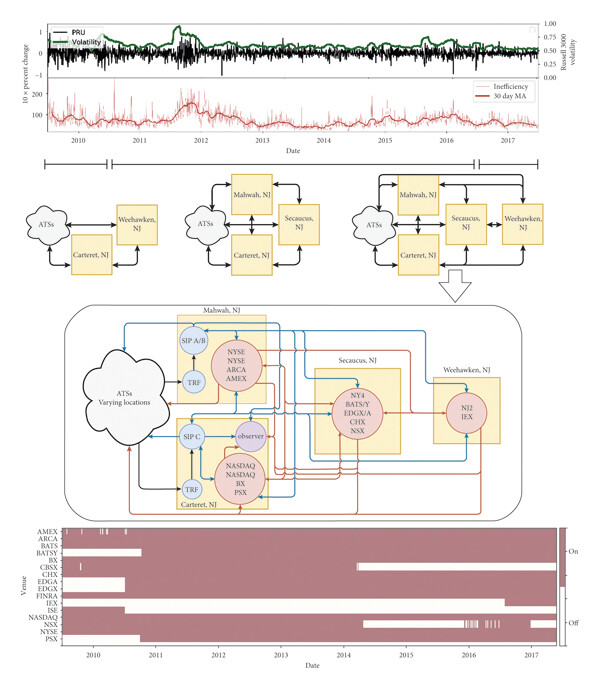

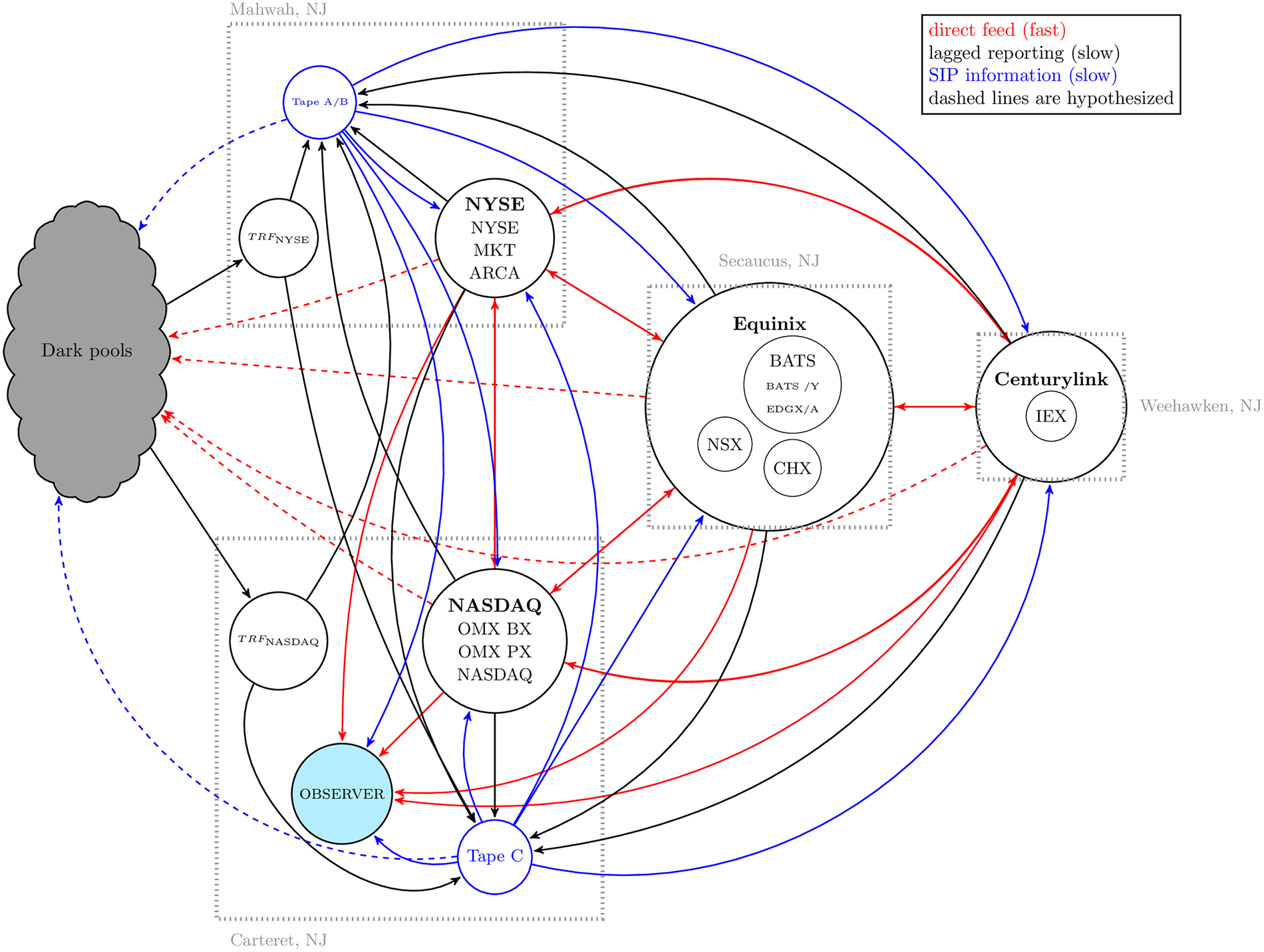

The U.S. stock market is one of the largest and most complex marketplaces in the global financial system. Over the past several decades, this market has evolved at multiple structural and temporal scales. New exchanges have become active and others have stopped trading, regulations have been introduced and adapted, and technological innovations have pushed the pace of trading activity to blistering speeds. These developments have supported the growth of a rich machine-trading ecology that leads to qualitative differences in trading behavior at human and machine time scales. We conduct a longitudinal analysis of comprehensive market data to quantify nonstationary dynamics throughout this system. We quantify the relationship between fluctuations in the number of active trading venues and realized opportunity costs experienced by market participants. We find that information asymmetries, in the form of quote dislocations, predict market-wide volatility indicators. Lastly, we uncover multiple micro-to-macro level pathways, including those exhibiting evidence of self-organized criticality.

Efficient Differentially Private Secure Aggregation for Federated Learning via Hardness of Learning with Errors

Timothy Stevens, Christian Skalka, Christelle Vincent, John H. Ring IV, Samuel Clark, Joseph P. Near

31st USENIX Security Symposium (USENIX Security 2022)

Federated machine learning leverages edge computing to develop models from network user data, but privacy in federated learning remains a major challenge. Techniques using differential privacy have been proposed to address this, but bring their own challenges. Many techniques require a trusted third party or else add too much noise to produce useful models. Recent advances in secure aggregation using multiparty computation eliminate the need for a third party, but are computationally expensive, especially at scale. We present a new federated learning protocol that leverages a novel differentially private, malicious secure aggregation protocol based on techniques from Learning With Errors. Our protocol outperforms current state-of-the-art techniques, and empirical results show that it scales to a large number of parties, with optimal accuracy for any differentially private federated learning scheme.

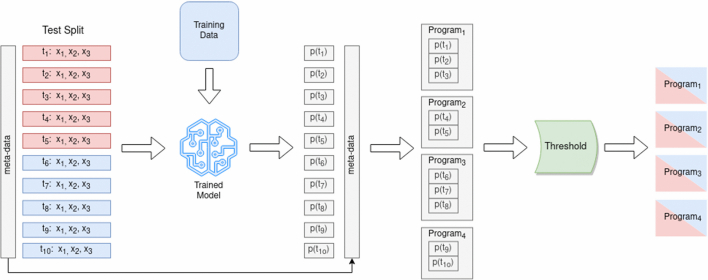

Methods for Host-based Intrusion Detection with Deep Learning

John H. Ring IV, Colin M. Van Oort, Samson Durst, Vanessa White, Joseph P. Near, Christian Skalka

Digital Threats: Research and Practice, Volume 2, Issue 4 (December 2021)

Host-based Intrusion Detection Systems (HIDS) automatically detect events that indicate compromise by adversarial applications. HIDS are generally formulated as analyses of sequences of system events such as bash commands or system calls. Anomaly-based approaches to HIDS leverage models of normal (a.k.a. baseline) system behavior to detect and report abnormal events and have the advantage of being able to detect novel attacks. In this article, we develop a new method for anomaly-based HIDS using deep learning predictions of sequence-to-sequence behavior in system calls. Our proposed method, called the ALAD algorithm, aggregates predictions at the application level to detect anomalies. We investigate the use of several deep learning architectures, including WaveNet and several recurrent networks. We show that ALAD empowered with deep learning significantly outperforms previous approaches. We train and evaluate our models using an existing dataset, ADFA-LD, and a new dataset of our own construction, PLAID. As deep learning models are black box in nature, we use an alternate approach, allotaxonographs, to characterize and understand differences in baseline vs. attack sequences in HIDS datasets such as PLAID.

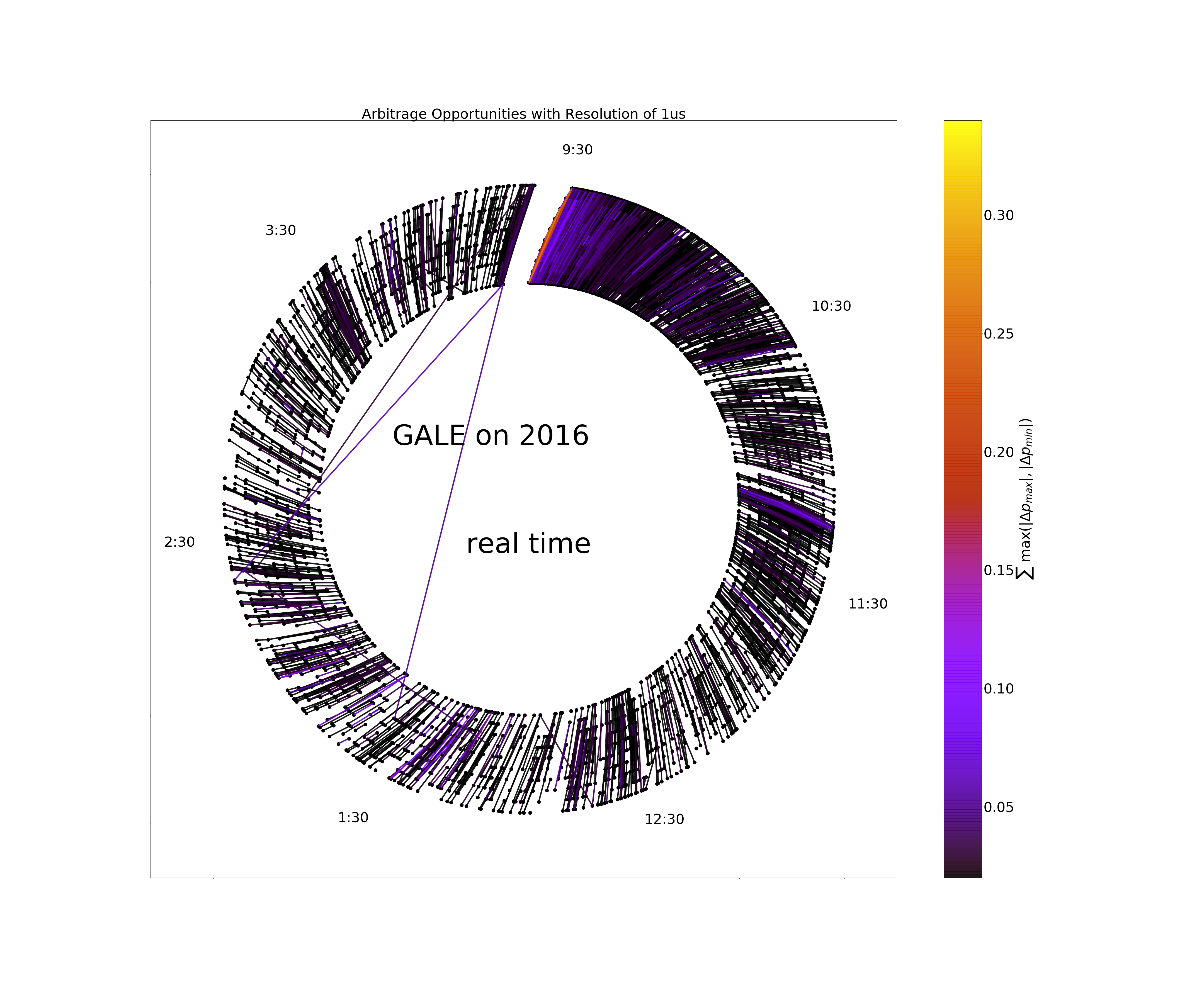

Fragmentation and Inefficiencies in the U.S. Equity Markets: Evidence from the Dow 30

Brian F. Tivnan, David Rushing Dewhurst, Colin M. Van Oort, John H. Ring IV, Tyler J. Gray, Brendan F. Tivnan, Matthew T. K. Koehler, Matthew T. McMahon, David Slater, Jason Veneman, Christopher M. Danforth

PLOS ONE 15(1): e0226968 (January 2020)

Using the most comprehensive source of commercially available data on the US National Market System, we analyze all quotes and trades associated with Dow 30 stocks in 2016 from the vantage point of a single and fixed frame of reference. Contrary to prevailing academic and popular opinion, we find that inefficiencies created in part by the fragmentation of the equity marketplace are widespread and potentially generate substantial profit for agents with superior market access. Information feeds reported different prices for the same equity, violating the commonly supposed economic behavior of a unified price for an indistinguishable product more than 120 million times, with “actionable” dislocation segments totaling almost 64 million. During this period, roughly 22% of all trades occurred while the SIP and aggregated direct feeds were dislocated. The current market configuration resulted in a realized opportunity cost totaling over $160 million when compared with a single-feed, single-exchange alternative, a conservative estimate that does not take into account intra-day offsetting events.

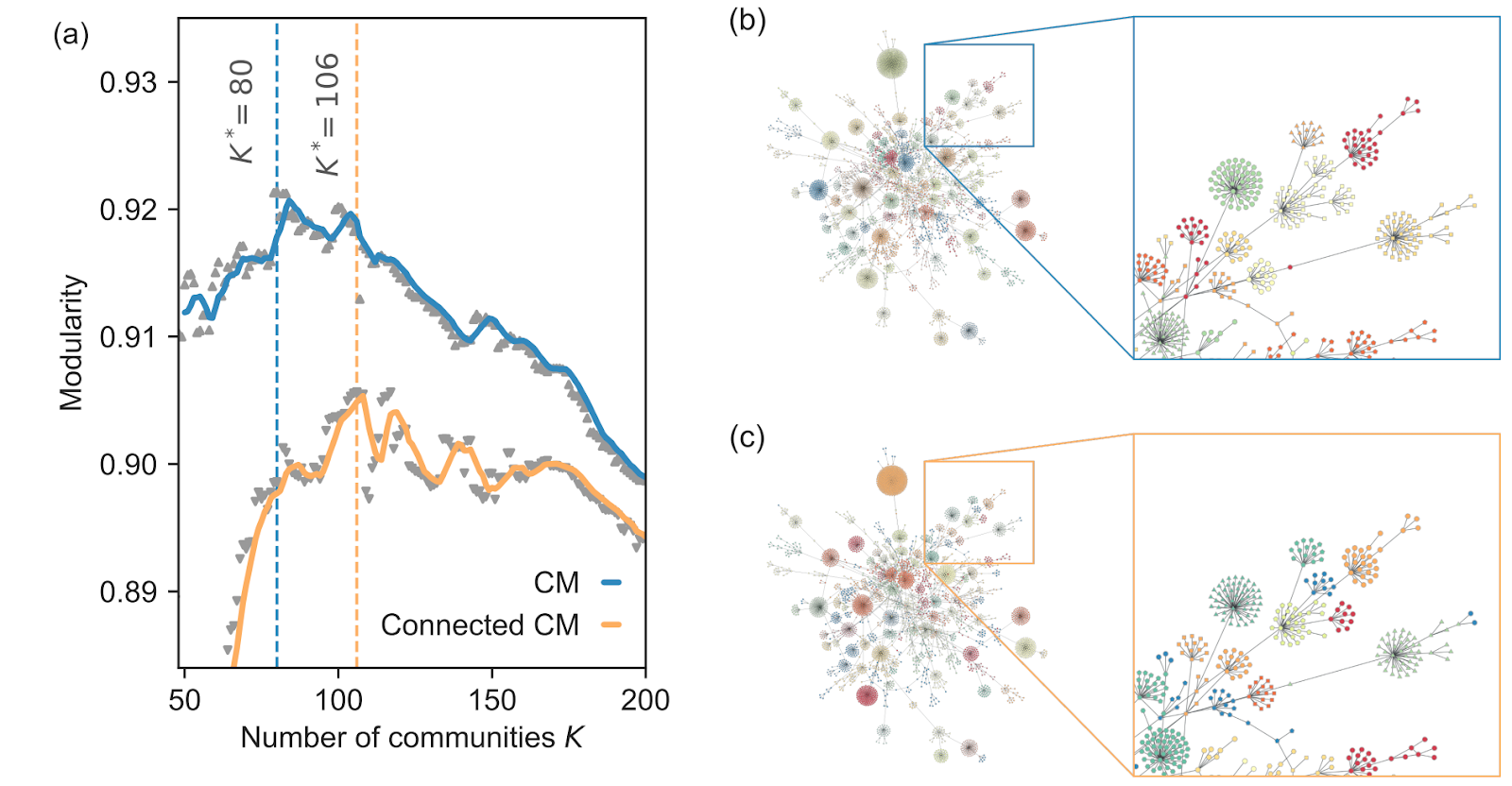

Connected graphs with a given degree sequence: efficient sampling, correlations, community detection and robustness

John H. Ring IV, Jean-Gabriel Young, Laurent Hébert-Dufresne

Proceedings of NetSci-X 2020

Random graph models can help us assess the significance of the structural properties of real complex systems. Given the value of a graph property and its value in a randomized ensemble, we can determine whether the property is explained by chance by comparing its real value to its value in the ensemble. The conclusions drawn with this approach obviously depend on the choice of randomization. We argue that keeping graphs in one connected piece, or component, is key for many applications where complex graphs are assumed to be connected either by definition (e.g. the Internet) or by construction (e.g. a crawled subset of the World-Wide Web obtained only by following hyperlinks). Using a heuristic to quickly sample the ensemble of small connected simple graphs with a fixed degree sequence, we investigate the significance of the structural patterns found in real connected graphs. We find that, in sparse networks, the connectedness constraint changes degree correlations, the outcome of community detection with modularity, and the predictions of percolation on the ensemble.

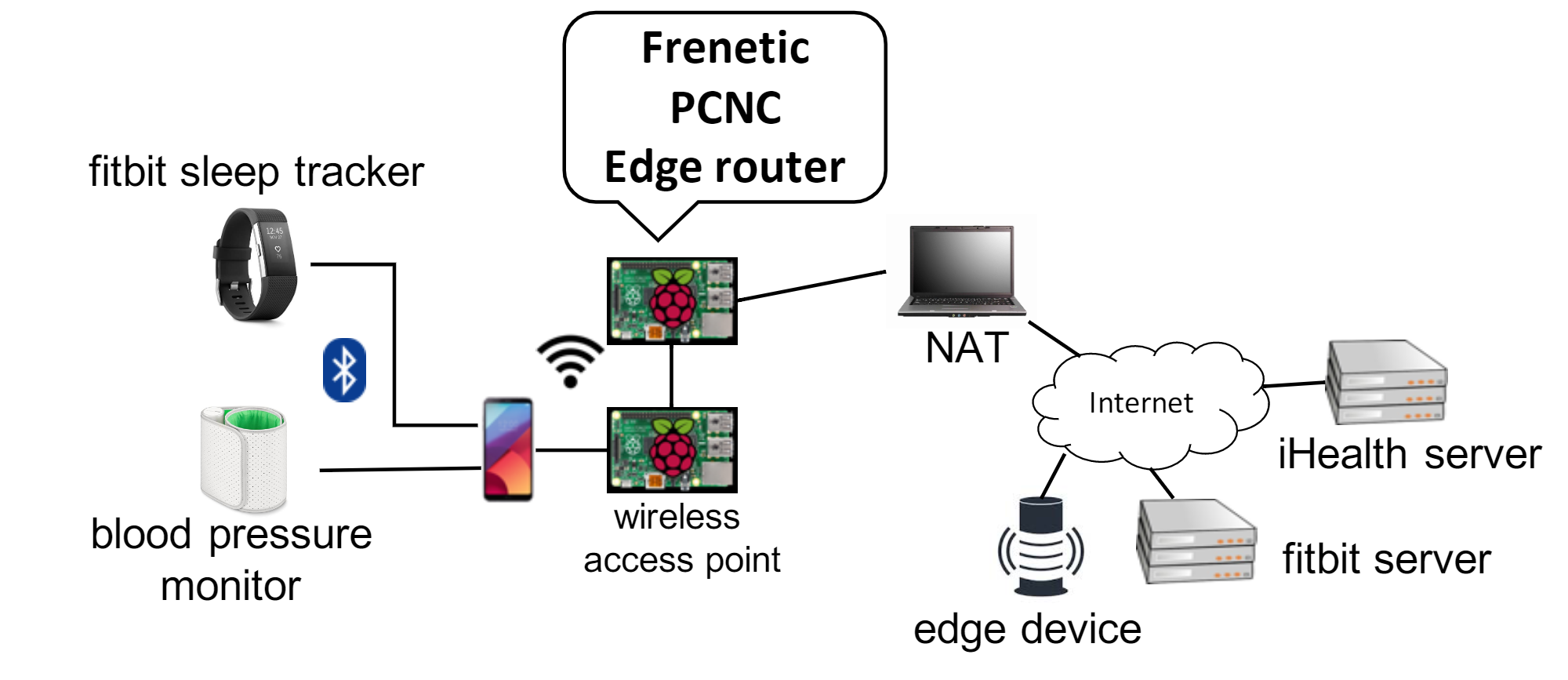

Proof-Carrying Network Code

Christian Skalka, John H. Ring IV, David Darias, Minseok Kwon, Sahil Gupta, Kyle Diller, Steffen Smolka, Nate Foster

Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security

Computer networks often serve as the first line of defense against malicious attacks. Although there are a growing number of tools for defining and enforcing security policies in software-defined networks (SDNs), most assume a single point of control and are unable to handle the challenges that arise in networks with multiple administrative domains. For example, consumers may want to allow their home IoT networks to be configured by device vendors, which raises security and privacy concerns. In this paper, we propose a framework called Proof-Carrying Network Code (PCNC) for specifying and enforcing security in SDNs with interacting administrative domains. Like Proof-Carrying Authorization (PCA), PCNC provides methods for managing authorization domains, and like Proof-Carrying Code (PCC), PCNC provides methods for enforcing behavioral properties of network programs. We develop theoretical foundations for PCNC and evaluate it in simulated and real network settings, including a case study that considers security in IoT networks for home health monitoring.

Preprints

Scaling of inefficiencies in the U.S. equity markets: Evidence from three market indices and more than 2900 securities

February 2019 by David Rushing Dewhurst, Colin M. Van Oort, John H. Ring IV, Tyler J. Gray, Christopher M. Danforth, Brian F. Tivnan

Using the most comprehensive, commercially-available dataset of trading activity in U.S. equity markets, we catalog and analyze dislocation segments and realized opportunity costs (ROC) incurred by market participants. We find that dislocation segments are common, observing a total of over 3.1 billion dislocation segments in the Russell 3000 during trading in 2016, or roughly 525 per second of trading. Up to 23% of observed trades may have contributed to the measured inefficiencies, leading to a ROC greater than $2 billion USD. A subset of the constituents of the S&P 500 index experiences the greatest amount of ROC and may drive inefficiencies in other stocks. In addition, we identify fine structure and self-similarity in the intra-day distribution of dislocation segment start times. These results point to universal underlying market mechanisms arising from the physical structure of the U.S. National Market System.